OpenStack private cloud

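1. Virtualization management platform

KVM hosts: 500

KVM virtual machines: 5000

A characteristic of KVM virtual machines: the inside of a VM is opaque to the host, whereas the inside of a container is visible from the host.

How many of the VMs run CentOS 7.6?

What is the IP address of each VM?

How many VMs are 4c8g, and how many are 2c4g?

Asset table kept as an Excel file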
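Without a management platform, questions like these end up being answered from a spreadsheet. The sketch below assumes the asset table has been exported from Excel to CSV with the hypothetical columns `name,os,vcpus,ram_gb,ip`. That column layout is an assumption and is not defined anywhere in these notes. Given that file, a few awk one-liners can produce the counts:

```bash
#!/usr/bin/env bash
# Hypothetical inventory format (CSV exported from the Excel asset sheet):
#   name,os,vcpus,ram_gb,ip
# The column layout is an assumption for illustration only.

# Count VMs whose os column equals the given value, e.g. "centos7.6".
count_by_os() {
  awk -F, -v os="$1" 'NR > 1 && $2 == os { n++ } END { print n + 0 }'
}

# Count VMs with a given shape: vCPU count and RAM in GB (4 8 means 4c8g).
count_by_shape() {
  awk -F, -v c="$1" -v m="$2" 'NR > 1 && $3 == c && $4 == m { n++ } END { print n + 0 }'
}

# Print "name ip" for every VM (the header row is skipped).
list_ips() {
  awk -F, 'NR > 1 { print $1, $5 }'
}
```

For example: `count_by_os centos7.6 < assets.csv` or `count_by_shape 4 8 < assets.csv`.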

KVM management platforms, small scale: oVirt, WebVirtMgr, CloudStack, ZStack, Proxmox VE, ...

Large scale: OpenStack, Alibaba Cloud private cloud, Tencent Cloud private cloud, QingCloud, UCloud, ...

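2. History of cloud computing

KVM management platform + billing system = public cloud

Amazon launched the AWS public cloud in 2006

Alibaba Cloud launched in 2011

OpenStack grew rapidly from 2012; in 2017 many domestic (Chinese) cloud vendors shut down

OpenStack is licensed under Apache 2.0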

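3. Introduction to OpenStack

It started out imitating AWS and later added features of its own. It is AWS-compatible, open source, and has a very active community.

A new release comes out every six months

Releases are named alphabetically: A, B, C, D ... K, L, M, N, O, Pike, Q, R, S, T, U, V, W, X, Y, Z

Chinese official documentation for the L release: https://docs.openstack.org/liberty/zh_CN/install-guide-rdo/

Chinese official documentation for the M release: https://docs.openstack.org/mitaka/zh_CN/install-guide-rdo/

Chinese official documentation for the N release: https://docs.openstack.org/newton/zh_CN/install-guide-rdo/

The last release with a Chinese guide: https://docs.openstack.org/ocata/zh_CN/install-guide-rdo/

From the P release (Pike) on, the official documentation changed substantially and is less suitable for beginners

Releases after T require CentOS 8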

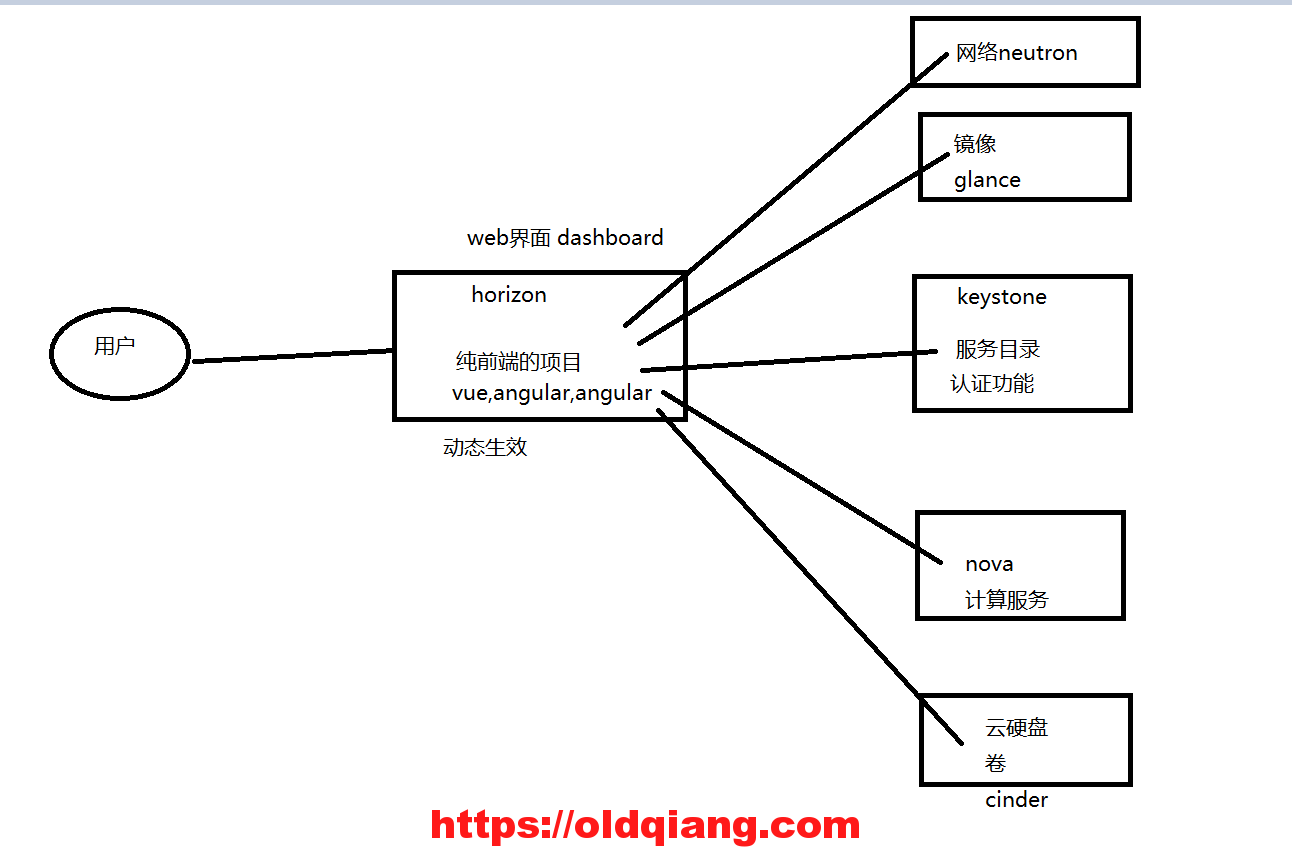

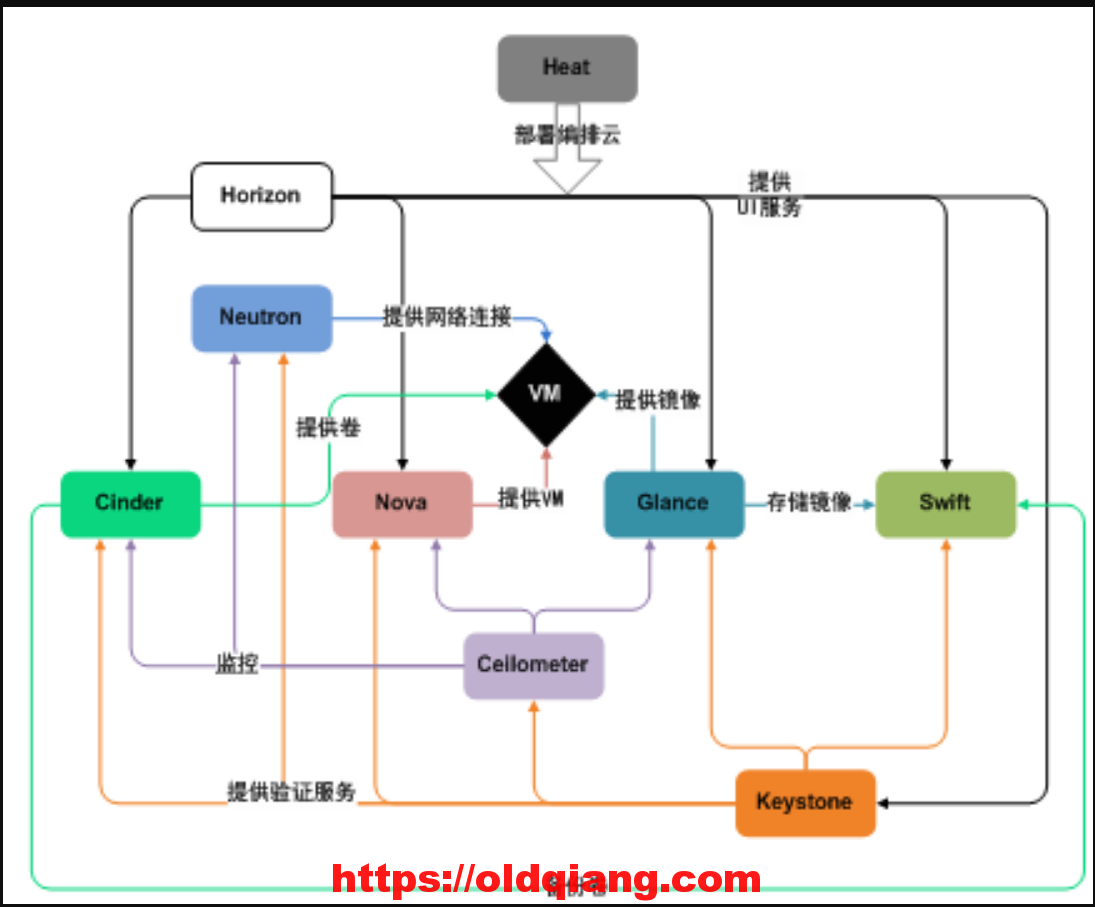

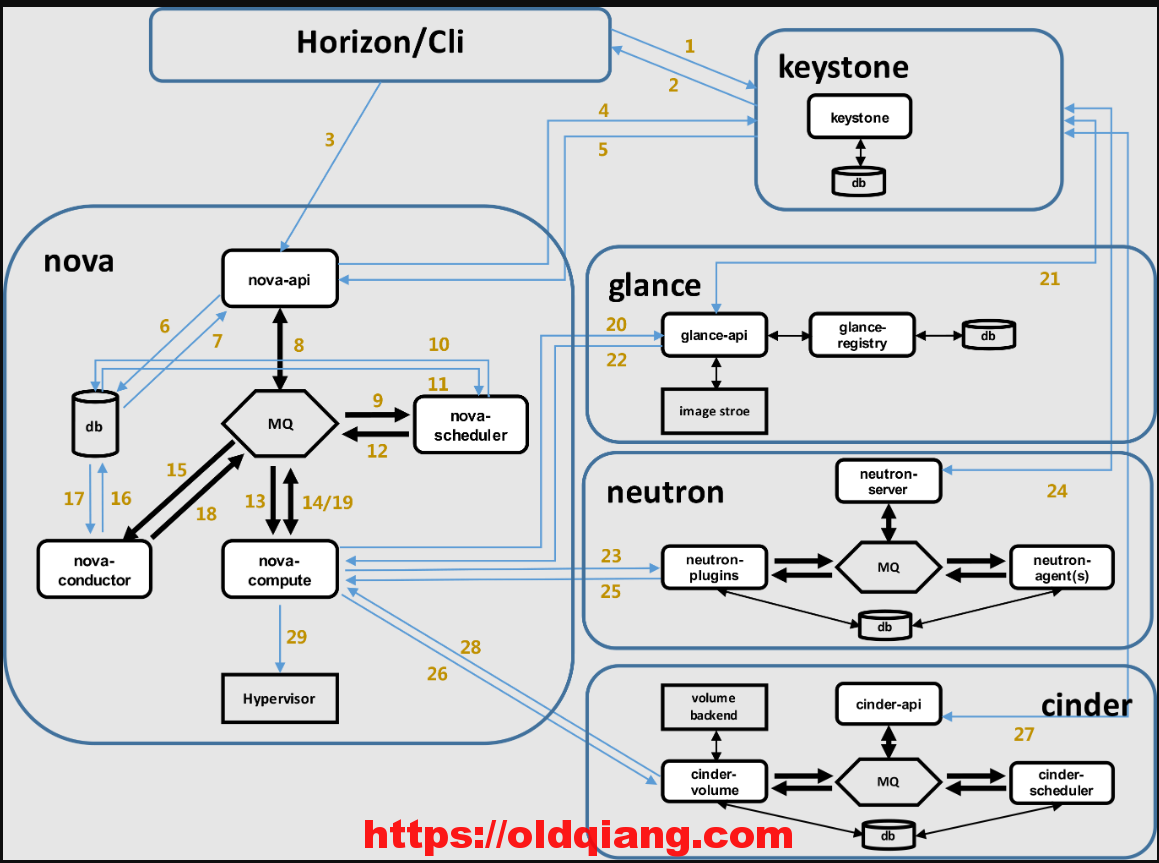

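4. OpenStack architecture

Modeled on AWS: a cloud platform product built for large-scale deployments

5. Preparing the OpenStack cluster environment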

| Role | Hostname | IP | Virtualization | Memory |
|---|---|---|---|---|
| Controller node | controller | 10.0.0.11 | Enabled | 4G |
| Compute node | compute1 | 10.0.0.31 | Enabled | 1G |
| Compute node | compute2 | 10.0.0.32 | Enabled | 1G |

Be sure to set the IP address, the hostname, and the hosts-file entries on every node
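The same hosts entries have to be added on every node, and re-running a setup script should not append duplicates. Here is a minimal sketch of an idempotent helper. `add_host_entry` is a hypothetical name. It only checks whether the IP is already listed, not whether the names match:

```bash
#!/usr/bin/env bash
# Append "IP NAME..." to a hosts file only if no line already starts with that IP.
# add_host_entry is a hypothetical helper, not part of any OpenStack tooling.
add_host_entry() {
  local file=$1 ip=$2
  shift 2
  # awk exits 0 when some line's first field equals the IP, 1 otherwise
  if awk -v ip="$ip" '$1 == ip { found = 1 } END { exit !found }' "$file"; then
    return 0
  fi
  echo "$ip $*" >> "$file"
}
```

For example, run `add_host_entry /etc/hosts 10.0.0.11 controller`, then do the same for compute1 (10.0.0.31) and compute2 (10.0.0.32).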

Prepare the yum repositories

```bash
echo '10.0.0.1 mirrors.aliyun.com' >>/etc/hosts
curl -o /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo
yum makecache
yum install centos-release-openstack-ocata.noarch -y
vim /etc/yum.repos.d/CentOS-QEMU-EV.repo
```

In CentOS-QEMU-EV.repo, comment out the mirrorlist line and add a baseurl:

```ini
#mirrorlist=http://mirrorlist.centos.org/?release=$releasever&arch=$basearch&repo=virt-kvm-common
baseurl=http://mirror.centos.org/$contentdir/$releasever/virt/$basearch/kvm-common/
```

Then change the 10.0.0.1 line in /etc/hosts so that it also resolves mirror.centos.org, and rebuild the cache:

```bash
vim /etc/hosts
# 10.0.0.1 mirrors.aliyun.com mirror.centos.org
yum makecache
yum repolist
```

Install the base services

Install the OpenStack client

```bash
# all nodes
yum install python-openstackclient -y
```

Install the database

```bash
# controller node
yum install mariadb mariadb-server python2-PyMySQL -y
vi /etc/my.cnf.d/openstack.cnf
```

```ini
[mysqld]
bind-address = 10.0.0.11
default-storage-engine = innodb
innodb_file_per_table = on
max_connections = 4096
collation-server = utf8_general_ci
character-set-server = utf8
```

```bash
# start
systemctl start mariadb
systemctl enable mariadb
# secure initialization: press Enter (no current root password), answer n (do not set a root password), then y to the remaining four prompts
mysql_secure_installation
# verify
[root@controller ~]# netstat -lntup|grep 3306
tcp 0 0 10.0.0.11:3306 0.0.0.0:* LISTEN 23304/mysqld
```

Install the message queue

```bash
# controller node
yum install rabbitmq-server -y
systemctl start rabbitmq-server.service
systemctl enable rabbitmq-server.service
# verify
[root@controller ~]# netstat -lntup|grep 5672
tcp 0 0 0.0.0.0:25672 0.0.0.0:* LISTEN 23847/beam
tcp6 0 0 :::5672 :::* LISTEN 23847/beam
# create the openstack user and grant it permissions
rabbitmqctl add_user openstack RABBIT_PASS
rabbitmqctl set_permissions openstack ".*" ".*" ".*"
```

Install the memcached cache

```bash
# controller node
yum install memcached python-memcached -y
# in /etc/sysconfig/memcached, set: OPTIONS="-l 10.0.0.11"
vim /etc/sysconfig/memcached
systemctl start memcached.service
systemctl enable memcached.service
# verify
[root@controller ~]# netstat -lntup|grep 11211
tcp 0 0 10.0.0.11:11211 0.0.0.0:* LISTEN 24519/memcached
udp 0 0 10.0.0.11:11211 0.0.0.0:* 24519/memcached
```

6. Install keystone

Run the following on the controller node.

Create the database
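The same CREATE DATABASE statement plus two GRANT statements recur for keystone, glance, nova, neutron and cinder. As an optional shortcut, a small shell function can print them for any service. `service_db_sql` is a hypothetical name. This is only a sketch, and the SQL written out for each service in these notes stays the reference:

```bash
#!/usr/bin/env bash
# Print CREATE DATABASE plus GRANT statements for localhost and any host ('%').
# Usage: service_db_sql DB_NAME DB_USER DB_PASSWORD
# service_db_sql is a hypothetical helper; pipe its output into the mysql client.
service_db_sql() {
  local db=$1 user=$2 pass=$3 host
  printf 'CREATE DATABASE %s;\n' "$db"
  for host in localhost %; do
    printf "GRANT ALL PRIVILEGES ON %s.* TO '%s'@'%s' IDENTIFIED BY '%s';\n" \
      "$db" "$user" "$host" "$pass"
  done
}
```

For example: `service_db_sql keystone keystone KEYSTONE_DBPASS | mysql -u root`. Add `-p` if root has a password. For nova, call it once each for nova_api, nova and nova_cell0.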

```sql
CREATE DATABASE keystone;
GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'localhost' IDENTIFIED BY 'KEYSTONE_DBPASS';
GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'%' IDENTIFIED BY 'KEYSTONE_DBPASS';
```

Install the keystone packages

```bash
yum install openstack-keystone httpd mod_wsgi -y
```

Edit the configuration file

```bash
cp /etc/keystone/keystone.conf /etc/keystone/keystone.conf.bak
>/etc/keystone/keystone.conf
vim /etc/keystone/keystone.conf
```

```ini
[DEFAULT]
[assignment]
[auth]
[cache]
[catalog]
[cors]
[cors.subdomain]
[credential]
[database]
connection = mysql+pymysql://keystone:KEYSTONE_DBPASS@controller/keystone
[domain_config]
[endpoint_filter]
[endpoint_policy]
[eventlet_server]
[federation]
[fernet_tokens]
[healthcheck]
[identity]
[identity_mapping]
[kvs]
[ldap]
[matchmaker_redis]
[memcache]
[oauth1]
[oslo_messaging_amqp]
[oslo_messaging_kafka]
[oslo_messaging_notifications]
[oslo_messaging_rabbit]
[oslo_messaging_zmq]
[oslo_middleware]
[oslo_policy]
[paste_deploy]
[policy]
[profiler]
[resource]
[revoke]
[role]
[saml]
[security_compliance]
[shadow_users]
[signing]
[token]
provider = fernet
[tokenless_auth]
[trust]
```

Sync the database

```bash
su -s /bin/sh -c "keystone-manage db_sync" keystone
```

Initialize the Fernet key repositories

```bash
keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone
keystone-manage credential_setup --keystone-user keystone --keystone-group keystone
```

Bootstrap keystone

```bash
keystone-manage bootstrap --bootstrap-password ADMIN_PASS \
  --bootstrap-admin-url http://controller:35357/v3/ \
  --bootstrap-internal-url http://controller:5000/v3/ \
  --bootstrap-public-url http://controller:5000/v3/ \
  --bootstrap-region-id RegionOne
```

Configure httpd

```bash
echo "ServerName controller" >>/etc/httpd/conf/httpd.conf
ln -s /usr/share/keystone/wsgi-keystone.conf /etc/httpd/conf.d/
```

Start httpd

```bash
systemctl start httpd
systemctl enable httpd
```

Verify keystone

```bash
vim admin-openrc
```

```bash
export OS_USERNAME=admin
export OS_PASSWORD=ADMIN_PASS
export OS_PROJECT_NAME=admin
export OS_USER_DOMAIN_NAME=Default
export OS_PROJECT_DOMAIN_NAME=Default
export OS_AUTH_URL=http://controller:35357/v3
export OS_IDENTITY_API_VERSION=3
```

```bash
source admin-openrc
```

Get a token to test
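If `openstack token issue` complains about missing auth options, a common cause is that admin-openrc was not sourced in the current shell. Here is a small sketch that lists which of the variables defined in admin-openrc are missing. `check_openrc` is a hypothetical helper, and its variable list is copied from that file:

```bash
#!/usr/bin/env bash
# Report which OpenStack credential variables from admin-openrc are unset or empty.
# check_openrc is a hypothetical helper; returns non-zero if anything is missing.
check_openrc() {
  local var missing=0
  for var in OS_USERNAME OS_PASSWORD OS_PROJECT_NAME OS_USER_DOMAIN_NAME \
             OS_PROJECT_DOMAIN_NAME OS_AUTH_URL OS_IDENTITY_API_VERSION; do
    # printenv prints nothing for variables that are not exported
    if [ -z "$(printenv "$var")" ]; then
      echo "missing: $var" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

Usage: `source admin-openrc && check_openrc && openstack token issue`.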

```bash
openstack token issue
```

Create the service project

```bash
openstack project create --domain default \
  --description "Service Project" service
```

7. Install glance

On the controller node:

1: Create the database and grant privileges

```sql
CREATE DATABASE glance;
GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'localhost' IDENTIFIED BY 'GLANCE_DBPASS';
GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'%' IDENTIFIED BY 'GLANCE_DBPASS';
```

2: Create the service user in keystone and assign it a role

```bash
openstack user create --domain default --password GLANCE_PASS glance
openstack role add --project service --user glance admin
```

3: Register the API endpoints in keystone
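Every service in these notes registers the same URL under three interfaces: public, internal and admin. The sketch below prints those three commands so they can be reviewed before running them. `endpoint_cmds` is a hypothetical name. It assumes `openstack service create` has already been run, and it uses RegionOne unless a third argument is given. The URL is single-quoted in the output so that cinder's `%(project_id)s` URL survives being piped to a shell:

```bash
#!/usr/bin/env bash
# Print one "openstack endpoint create" command per interface.
# Usage: endpoint_cmds SERVICE_TYPE URL [REGION]
# endpoint_cmds is a hypothetical helper; review the output, then pipe it to sh.
endpoint_cmds() {
  local type=$1 url=$2 region=${3:-RegionOne} iface
  for iface in public internal admin; do
    echo "openstack endpoint create --region $region $type $iface '$url'"
  done
}
```

For example: `endpoint_cmds image http://controller:9292 | sh`.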

```bash
openstack service create --name glance \
  --description "OpenStack Image" image
openstack endpoint create --region RegionOne \
  image public http://controller:9292
openstack endpoint create --region RegionOne \
  image internal http://controller:9292
openstack endpoint create --region RegionOne \
  image admin http://controller:9292
```

4: Install the packages

```bash
yum install openstack-glance -y
```

5: Edit the configuration files

Edit the glance-api configuration file
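The configuration files in these notes contain every section header but only the options that were changed. They appear to be the stock file with comments and blank lines removed. That is an inference from their shape; the notes do not say so. Instead of typing the skeleton by hand, a similar one can be generated from the `.bak` copy. `strip_conf` is a hypothetical helper name. Any option that is already active in the packaged file would be kept as well:

```bash
#!/usr/bin/env bash
# Print a config file without comment lines or blank/whitespace-only lines,
# leaving the [section] headers and any active options as a skeleton to edit.
# strip_conf is a hypothetical helper name.
strip_conf() {
  grep -Ev '^[[:space:]]*(#|$)' "$1"
}
```

For example, run `strip_conf /etc/glance/glance-api.conf.bak > /etc/glance/glance-api.conf`, then open the file in vim and fill in the values.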

```bash
cp /etc/glance/glance-api.conf /etc/glance/glance-api.conf.bak
>/etc/glance/glance-api.conf
vim /etc/glance/glance-api.conf
```

```ini
[DEFAULT]
[cors]
[cors.subdomain]
[database]
connection = mysql+pymysql://glance:GLANCE_DBPASS@controller/glance
[glance_store]
stores = file,http
default_store = file
filesystem_store_datadir = /var/lib/glance/images/
[image_format]
[keystone_authtoken]
auth_uri = http://controller:5000
auth_url = http://controller:35357
memcached_servers = controller:11211
auth_type = password
project_domain_name = default
user_domain_name = default
project_name = service
username = glance
password = GLANCE_PASS
[matchmaker_redis]
[oslo_concurrency]
[oslo_messaging_amqp]
[oslo_messaging_kafka]
[oslo_messaging_notifications]
[oslo_messaging_rabbit]
[oslo_messaging_zmq]
[oslo_middleware]
[oslo_policy]
[paste_deploy]
flavor = keystone
[profiler]
[store_type_location_strategy]
[task]
[taskflow_executor]
```

Edit the glance-registry configuration file

```bash
cp /etc/glance/glance-registry.conf /etc/glance/glance-registry.conf.bak
>/etc/glance/glance-registry.conf
vim /etc/glance/glance-registry.conf
```

```ini
[DEFAULT]
[database]
connection = mysql+pymysql://glance:GLANCE_DBPASS@controller/glance
[keystone_authtoken]
auth_uri = http://controller:5000
auth_url = http://controller:35357
memcached_servers = controller:11211
auth_type = password
project_domain_name = default
user_domain_name = default
project_name = service
username = glance
password = GLANCE_PASS
[matchmaker_redis]
[oslo_messaging_amqp]
[oslo_messaging_kafka]
[oslo_messaging_notifications]
[oslo_messaging_rabbit]
[oslo_messaging_zmq]
[oslo_policy]
[paste_deploy]
flavor = keystone
[profiler]
```

6: Sync the database

```bash
su -s /bin/sh -c "glance-manage db_sync" glance
```

7: Start the services

```bash
systemctl start openstack-glance-api.service openstack-glance-registry.service
systemctl enable openstack-glance-api.service openstack-glance-registry.service
```

8: Verify

```bash
# upload the cirros-0.3.4-x86_64-disk.img image
openstack image create "cirros" --file cirros-0.3.4-x86_64-disk.img \
  --disk-format qcow2 --container-format bare --public
```

8. Install nova

8.1 Controller node

1: Create the databases and grant privileges

```sql
CREATE DATABASE nova_api;
CREATE DATABASE nova;
CREATE DATABASE nova_cell0;
GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'localhost' IDENTIFIED BY 'NOVA_DBPASS';
GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%' IDENTIFIED BY 'NOVA_DBPASS';
GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'localhost' IDENTIFIED BY 'NOVA_DBPASS';
GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' IDENTIFIED BY 'NOVA_DBPASS';
GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'localhost' IDENTIFIED BY 'NOVA_DBPASS';
GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'%' IDENTIFIED BY 'NOVA_DBPASS';
```

2: Create the service users in keystone and assign them a role

```bash
openstack user create --domain default --password NOVA_PASS nova
openstack role add --project service --user nova admin
openstack user create --domain default --password PLACEMENT_PASS placement
openstack role add --project service --user placement admin
```

3: Register the API endpoints in keystone

```bash
openstack service create --name nova \
  --description "OpenStack Compute" compute
openstack endpoint create --region RegionOne \
  compute public http://controller:8774/v2.1
openstack endpoint create --region RegionOne \
  compute internal http://controller:8774/v2.1
openstack endpoint create --region RegionOne \
  compute admin http://controller:8774/v2.1
openstack service create --name placement --description "Placement API" placement
openstack endpoint create --region RegionOne placement public http://controller:8778
openstack endpoint create --region RegionOne placement internal http://controller:8778
openstack endpoint create --region RegionOne placement admin http://controller:8778
```

4: Install the packages

```bash
yum install openstack-nova-api openstack-nova-conductor \
  openstack-nova-console openstack-nova-novncproxy \
  openstack-nova-scheduler openstack-nova-placement-api -y
```

5: Edit the configuration files

Edit the nova configuration file

```bash
cp /etc/nova/nova.conf /etc/nova/nova.conf.bak
>/etc/nova/nova.conf
vim /etc/nova/nova.conf
```

```ini
[DEFAULT]
enabled_apis = osapi_compute,metadata
transport_url = rabbit://openstack:RABBIT_PASS@controller
my_ip = 10.0.0.11
use_neutron = True
firewall_driver = nova.virt.firewall.NoopFirewallDriver
[api]
auth_strategy = keystone
[api_database]
connection = mysql+pymysql://nova:NOVA_DBPASS@controller/nova_api
[barbican]
[cache]
[cells]
[cinder]
[cloudpipe]
[conductor]
[console]
[consoleauth]
[cors]
[cors.subdomain]
[crypto]
[database]
connection = mysql+pymysql://nova:NOVA_DBPASS@controller/nova
[ephemeral_storage_encryption]
[filter_scheduler]
[glance]
api_servers = http://controller:9292
[guestfs]
[healthcheck]
[hyperv]
[image_file_url]
[ironic]
[key_manager]
[keystone_authtoken]
auth_uri = http://controller:5000
auth_url = http://controller:35357
memcached_servers = controller:11211
auth_type = password
project_domain_name = default
user_domain_name = default
project_name = service
username = nova
password = NOVA_PASS
[libvirt]
[matchmaker_redis]
[metrics]
[mks]
[neutron]
[notifications]
[osapi_v21]
[oslo_concurrency]
lock_path = /var/lib/nova/tmp
[oslo_messaging_amqp]
[oslo_messaging_kafka]
[oslo_messaging_notifications]
[oslo_messaging_rabbit]
[oslo_messaging_zmq]
[oslo_middleware]
[oslo_policy]
[pci]
[placement]
os_region_name = RegionOne
project_domain_name = Default
project_name = service
auth_type = password
user_domain_name = Default
auth_url = http://controller:35357/v3
username = placement
password = PLACEMENT_PASS
[quota]
[rdp]
[remote_debug]
[scheduler]
[serial_console]
[service_user]
[spice]
[ssl]
[trusted_computing]
[upgrade_levels]
[vendordata_dynamic_auth]
[vmware]
[vnc]
enabled = true
vncserver_listen = $my_ip
vncserver_proxyclient_address = $my_ip
[workarounds]
[wsgi]
[xenserver]
[xvp]
```

Edit the placement configuration

```bash
# insert the following block just before </VirtualHost>
vim /etc/httpd/conf.d/00-nova-placement-api.conf
```

```apache
<Directory /usr/bin>
   <IfVersion >= 2.4>
      Require all granted
   </IfVersion>
   <IfVersion < 2.4>
      Order allow,deny
      Allow from all
   </IfVersion>
</Directory>
```

Start placement

```bash
systemctl restart httpd
```

6: Sync the databases

```bash
su -s /bin/sh -c "nova-manage api_db sync" nova
su -s /bin/sh -c "nova-manage cell_v2 map_cell0" nova
su -s /bin/sh -c "nova-manage cell_v2 create_cell --name=cell1 --verbose" nova
su -s /bin/sh -c "nova-manage db sync" nova
```

Verify

```bash
nova-manage cell_v2 list_cells
```

7: Start the services

```bash
systemctl start openstack-nova-api.service \
  openstack-nova-consoleauth.service openstack-nova-scheduler.service \
  openstack-nova-conductor.service openstack-nova-novncproxy.service
systemctl enable openstack-nova-api.service \
  openstack-nova-consoleauth.service openstack-nova-scheduler.service \
  openstack-nova-conductor.service openstack-nova-novncproxy.service
```

8: Verify

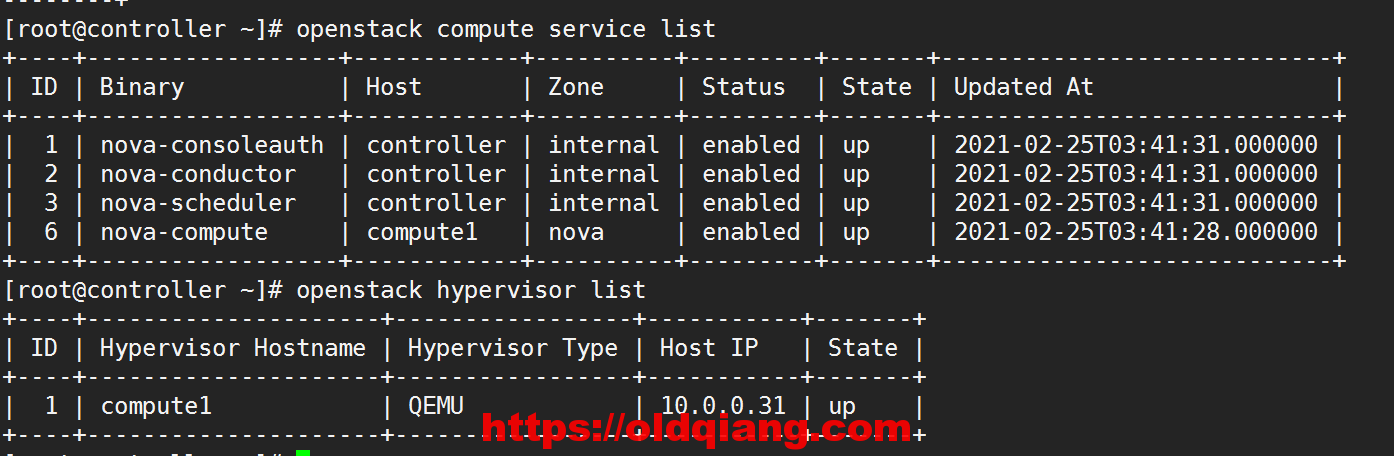

```bash
nova service-list
```

8.2 Compute node

1. Install

```bash
yum install openstack-nova-compute -y
```

2. Configure

```bash
cp /etc/nova/nova.conf /etc/nova/nova.conf.bak
>/etc/nova/nova.conf
vim /etc/nova/nova.conf
```

```ini
[DEFAULT]
enabled_apis = osapi_compute,metadata
transport_url = rabbit://openstack:RABBIT_PASS@controller
# management IP of this compute node (10.0.0.32 on compute2)
my_ip = 10.0.0.31
use_neutron = True
firewall_driver = nova.virt.firewall.NoopFirewallDriver
[api]
auth_strategy = keystone
[api_database]
[barbican]
[cache]
[cells]
[cinder]
[cloudpipe]
[conductor]
[console]
[consoleauth]
[cors]
[cors.subdomain]
[crypto]
[database]
[ephemeral_storage_encryption]
[filter_scheduler]
[glance]
api_servers = http://controller:9292
[guestfs]
[healthcheck]
[hyperv]
[image_file_url]
[ironic]
[key_manager]
[keystone_authtoken]
auth_uri = http://controller:5000
auth_url = http://controller:35357
memcached_servers = controller:11211
auth_type = password
project_domain_name = default
user_domain_name = default
project_name = service
username = nova
password = NOVA_PASS
[libvirt]
[matchmaker_redis]
[metrics]
[mks]
[neutron]
[notifications]
[osapi_v21]
[oslo_concurrency]
lock_path = /var/lib/nova/tmp
[oslo_messaging_amqp]
[oslo_messaging_kafka]
[oslo_messaging_notifications]
[oslo_messaging_rabbit]
[oslo_messaging_zmq]
[oslo_middleware]
[oslo_policy]
[pci]
[placement]
os_region_name = RegionOne
project_domain_name = Default
project_name = service
auth_type = password
user_domain_name = Default
auth_url = http://controller:35357/v3
username = placement
password = PLACEMENT_PASS
[quota]
[rdp]
[remote_debug]
[scheduler]
[serial_console]
[service_user]
[spice]
[ssl]
[trusted_computing]
[upgrade_levels]
[vendordata_dynamic_auth]
[vmware]
[vnc]
enabled = True
vncserver_listen = 0.0.0.0
vncserver_proxyclient_address = $my_ip
novncproxy_base_url = http://controller:6080/vnc_auto.html
[workarounds]
[wsgi]
[xenserver]
[xvp]
```

3. Start

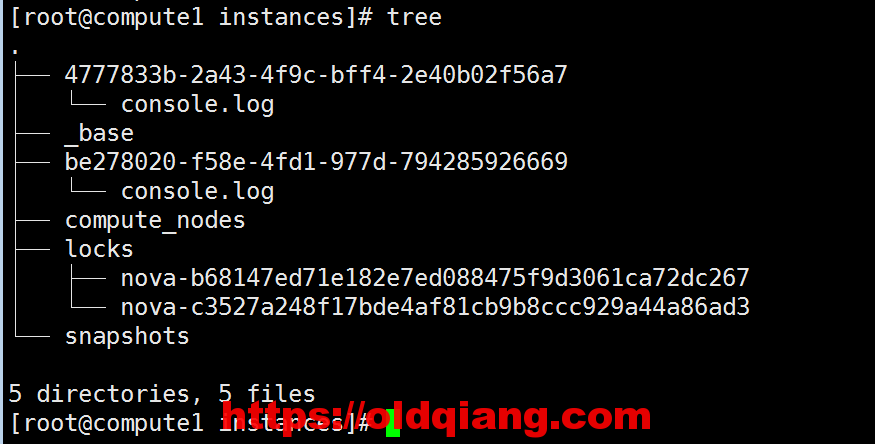

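```bash
systemctl start libvirtd openstack-nova-compute.service
systemctl enable libvirtd openstack-nova-compute.service
```

Important step: every time a compute node is added, register it with the cell by running the following on the controller node

Controller node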

```bash
su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova
```

9. Install neutron

9.1 Controller node

1: Create the database and grant privileges

```sql
CREATE DATABASE neutron;
GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'localhost' IDENTIFIED BY 'NEUTRON_DBPASS';
GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' IDENTIFIED BY 'NEUTRON_DBPASS';
```

2: Create the service user in keystone and assign it a role

```bash
openstack user create --domain default --password NEUTRON_PASS neutron
openstack role add --project service --user neutron admin
```

3: Register the API endpoints in keystone

```bash
openstack service create --name neutron \
  --description "OpenStack Networking" network
openstack endpoint create --region RegionOne \
  network public http://controller:9696
openstack endpoint create --region RegionOne \
  network internal http://controller:9696
openstack endpoint create --region RegionOne \
  network admin http://controller:9696
```

4: Install the packages

```bash
yum install openstack-neutron openstack-neutron-ml2 \
  openstack-neutron-linuxbridge ebtables -y
```

5: Edit the configuration files

Edit the neutron configuration file

```bash
cp /etc/neutron/neutron.conf /etc/neutron/neutron.conf.bak
>/etc/neutron/neutron.conf
vim /etc/neutron/neutron.conf
```

```ini
[DEFAULT]
core_plugin = ml2
service_plugins =
transport_url = rabbit://openstack:RABBIT_PASS@controller
auth_strategy = keystone
notify_nova_on_port_status_changes = true
notify_nova_on_port_data_changes = true
[agent]
[cors]
[cors.subdomain]
[database]
connection = mysql+pymysql://neutron:NEUTRON_DBPASS@controller/neutron
[keystone_authtoken]
auth_uri = http://controller:5000
auth_url = http://controller:35357
memcached_servers = controller:11211
auth_type = password
project_domain_name = default
user_domain_name = default
project_name = service
username = neutron
password = NEUTRON_PASS
[matchmaker_redis]
[nova]
auth_url = http://controller:35357
auth_type = password
project_domain_name = default
user_domain_name = default
region_name = RegionOne
project_name = service
username = nova
password = NOVA_PASS
[oslo_concurrency]
lock_path = /var/lib/neutron/tmp
[oslo_messaging_amqp]
[oslo_messaging_kafka]
[oslo_messaging_notifications]
[oslo_messaging_rabbit]
[oslo_messaging_zmq]
[oslo_middleware]
[oslo_policy]
[qos]
[quotas]
[ssl]
```

Edit ml2_conf.ini

```bash
cp /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugins/ml2/ml2_conf.ini.bak
>/etc/neutron/plugins/ml2/ml2_conf.ini
vim /etc/neutron/plugins/ml2/ml2_conf.ini
```

```ini
[DEFAULT]
[ml2]
type_drivers = flat,vlan
tenant_network_types =
mechanism_drivers = linuxbridge
extension_drivers = port_security
[ml2_type_flat]
flat_networks = provider
[ml2_type_geneve]
[ml2_type_gre]
[ml2_type_vlan]
[ml2_type_vxlan]
[securitygroup]
enable_ipset = true
```

Edit the linuxbridge-agent configuration
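`physical_interface_mappings = provider:eth0` only works if the NIC that carries 10.0.0.0/24 is really named eth0. On a default CentOS 7 install it may be called something like ens33. The sketch below reads the output of `ip -o -4 addr show` and prints the interface that holds a given address. `iface_for_ip` is a hypothetical helper:

```bash
#!/usr/bin/env bash
# Read "ip -o -4 addr show" output on stdin and print the interface that
# holds the given IPv4 address (field 2 is the name, field 4 is addr/prefix).
# iface_for_ip is a hypothetical helper.
iface_for_ip() {
  awk -v ip="$1" '{ split($4, a, "/"); if (a[1] == ip) { print $2; exit } }'
}
```

Run it on each node with that node's own address, for example `ip -o -4 addr show | iface_for_ip 10.0.0.11` on the controller. If the printed name is not eth0, use it in place of eth0.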

```bash
cp /etc/neutron/plugins/ml2/linuxbridge_agent.ini /etc/neutron/plugins/ml2/linuxbridge_agent.ini.bak
>/etc/neutron/plugins/ml2/linuxbridge_agent.ini
vim /etc/neutron/plugins/ml2/linuxbridge_agent.ini
```

```ini
[DEFAULT]
[agent]
[linux_bridge]
physical_interface_mappings = provider:eth0
[securitygroup]
enable_security_group = true
firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver
[vxlan]
enable_vxlan = false
```

Edit the DHCP agent configuration

```bash
vim /etc/neutron/dhcp_agent.ini
```

```ini
[DEFAULT]
interface_driver = linuxbridge
dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq
enable_isolated_metadata = true
```

Edit the metadata-agent configuration

```bash
vim /etc/neutron/metadata_agent.ini
```

```ini
[DEFAULT]
nova_metadata_ip = controller
metadata_proxy_shared_secret = METADATA_SECRET
```

Edit nova.conf

```bash
# controller node: fill in the [neutron] section
vim /etc/nova/nova.conf
```

```ini
[neutron]
url = http://controller:9696
auth_url = http://controller:35357
auth_type = password
project_domain_name = default
user_domain_name = default
region_name = RegionOne
project_name = service
username = neutron
password = NEUTRON_PASS
service_metadata_proxy = true
metadata_proxy_shared_secret = METADATA_SECRET
```

6: Sync the database

```bash
ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini
su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf \
  --config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron
```

7: Start the services

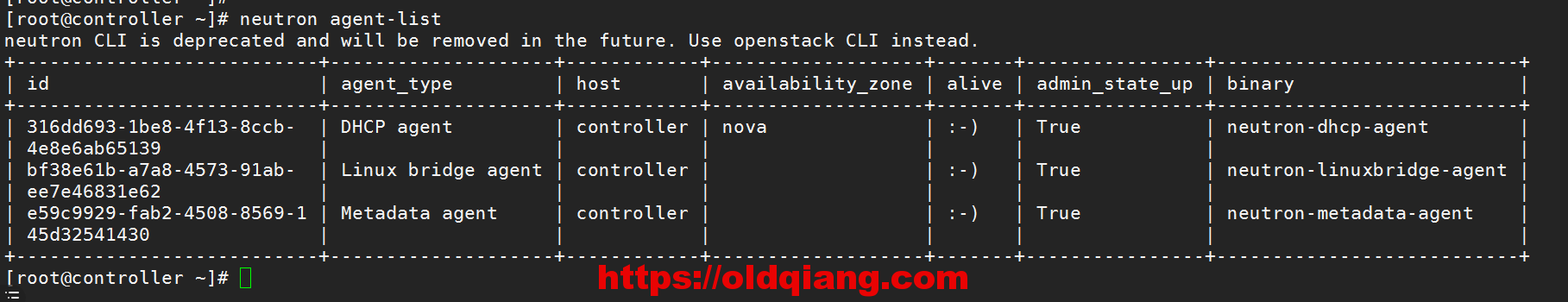

```bash
systemctl restart openstack-nova-api.service
systemctl start neutron-server.service neutron-linuxbridge-agent.service \
  neutron-dhcp-agent.service neutron-metadata-agent.service
systemctl enable neutron-server.service neutron-linuxbridge-agent.service \
  neutron-dhcp-agent.service neutron-metadata-agent.service
```

8: Verify

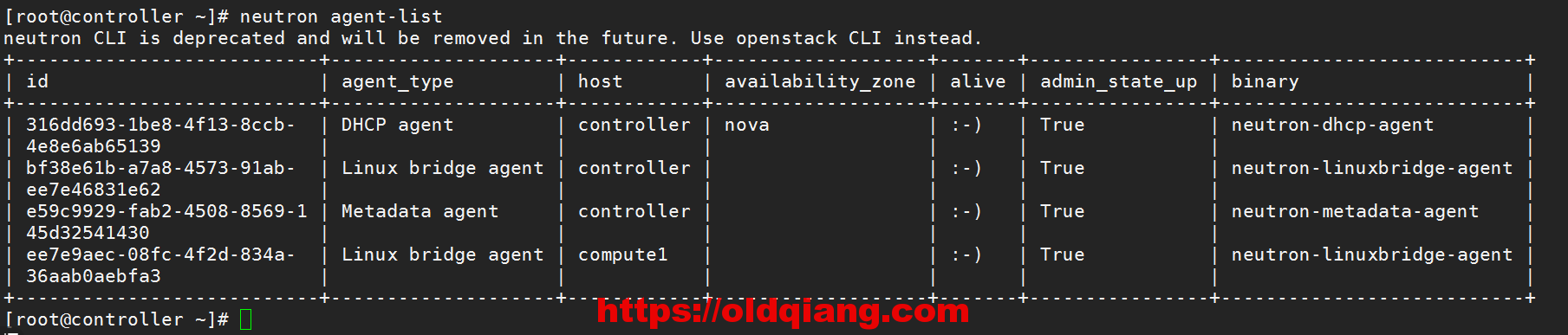

9.2 Compute node

1. Install

```bash
yum install openstack-neutron-linuxbridge ebtables ipset -y
```

2. Configure

Edit neutron.conf

```bash
cp /etc/neutron/neutron.conf /etc/neutron/neutron.conf.bak
>/etc/neutron/neutron.conf
vim /etc/neutron/neutron.conf
```

```ini
[DEFAULT]
transport_url = rabbit://openstack:RABBIT_PASS@controller
auth_strategy = keystone
[agent]
[cors]
[cors.subdomain]
[database]
[keystone_authtoken]
auth_uri = http://controller:5000
auth_url = http://controller:35357
memcached_servers = controller:11211
auth_type = password
project_domain_name = default
user_domain_name = default
project_name = service
username = neutron
password = NEUTRON_PASS
[matchmaker_redis]
[nova]
[oslo_concurrency]
lock_path = /var/lib/neutron/tmp
[oslo_messaging_amqp]
[oslo_messaging_kafka]
[oslo_messaging_notifications]
[oslo_messaging_rabbit]
[oslo_messaging_zmq]
[oslo_middleware]
[oslo_policy]
[qos]
[quotas]
[ssl]
```

Edit linuxbridge_agent.ini

```bash
cp /etc/neutron/plugins/ml2/linuxbridge_agent.ini /etc/neutron/plugins/ml2/linuxbridge_agent.ini.bak
>/etc/neutron/plugins/ml2/linuxbridge_agent.ini
vim /etc/neutron/plugins/ml2/linuxbridge_agent.ini
```

```ini
[DEFAULT]
[agent]
[linux_bridge]
physical_interface_mappings = provider:eth0
[securitygroup]
enable_security_group = true
firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver
[vxlan]
enable_vxlan = false
```

Edit nova.conf

```bash
# compute node: fill in the [neutron] section
vim /etc/nova/nova.conf
```

```ini
[neutron]
url = http://controller:9696
auth_url = http://controller:35357
auth_type = password
project_domain_name = default
user_domain_name = default
region_name = RegionOne
project_name = service
username = neutron
password = NEUTRON_PASS
```

3. Start

```bash
systemctl restart openstack-nova-compute.service
systemctl start neutron-linuxbridge-agent.service
systemctl enable neutron-linuxbridge-agent.service
```

4. Verify

10. Install horizon

1. Install

```bash
# compute node
yum install openstack-dashboard -y
```

2. Configure

Edit /etc/openstack-dashboard/local_settings; see the official documentation for the details.

3. Start

```bash
systemctl start httpd
systemctl enable httpd
```

4: Access the dashboard

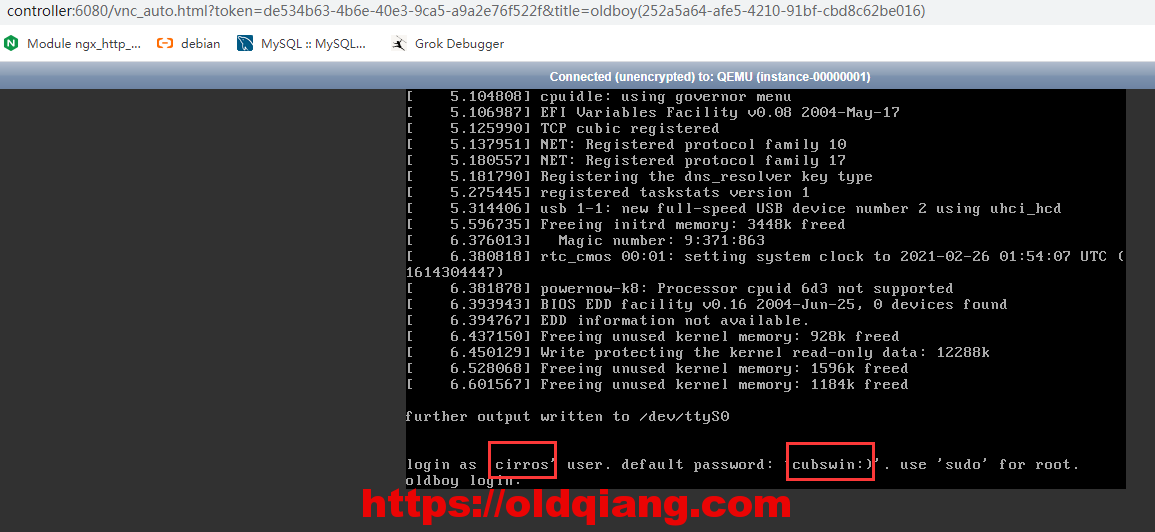

11. Launch an instance

1. Create the network

Command line

```bash
openstack network create --share --external \
  --provider-physical-network provider \
  --provider-network-type flat wan
```

Web UI

Create the subnet

Command line

```bash
# the final argument (10.0.0.0) is the name given to the subnet
openstack subnet create --network wan \
  --allocation-pool start=10.0.0.100,end=10.0.0.200 \
  --dns-nameserver 180.76.76.76 --gateway 10.0.0.254 \
  --subnet-range 10.0.0.0/24 10.0.0.0
```
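The allocation pool 10.0.0.100-10.0.0.200 has to lie inside 10.0.0.0/24. It should not contain the gateway (10.0.0.254) or the fixed node addresses (10.0.0.11, 10.0.0.31, 10.0.0.32); otherwise DHCP could hand an instance an address that is already in use. Here is a minimal pure-shell check. `ip_to_int` and `ip_in_range` are hypothetical helpers:

```bash
#!/usr/bin/env bash
# Convert a dotted IPv4 address such as 10.0.0.11 to a 32-bit integer.
ip_to_int() {
  local IFS=.
  set -- $1   # split on dots into four octets
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Succeed if IP lies within [START, END], inclusive.
ip_in_range() {
  local ip start end
  ip=$(ip_to_int "$1"); start=$(ip_to_int "$2"); end=$(ip_to_int "$3")
  [ "$ip" -ge "$start" ] && [ "$ip" -le "$end" ]
}
```

For example: `ip_in_range 10.0.0.254 10.0.0.100 10.0.0.200 || echo 'gateway is outside the pool'`.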

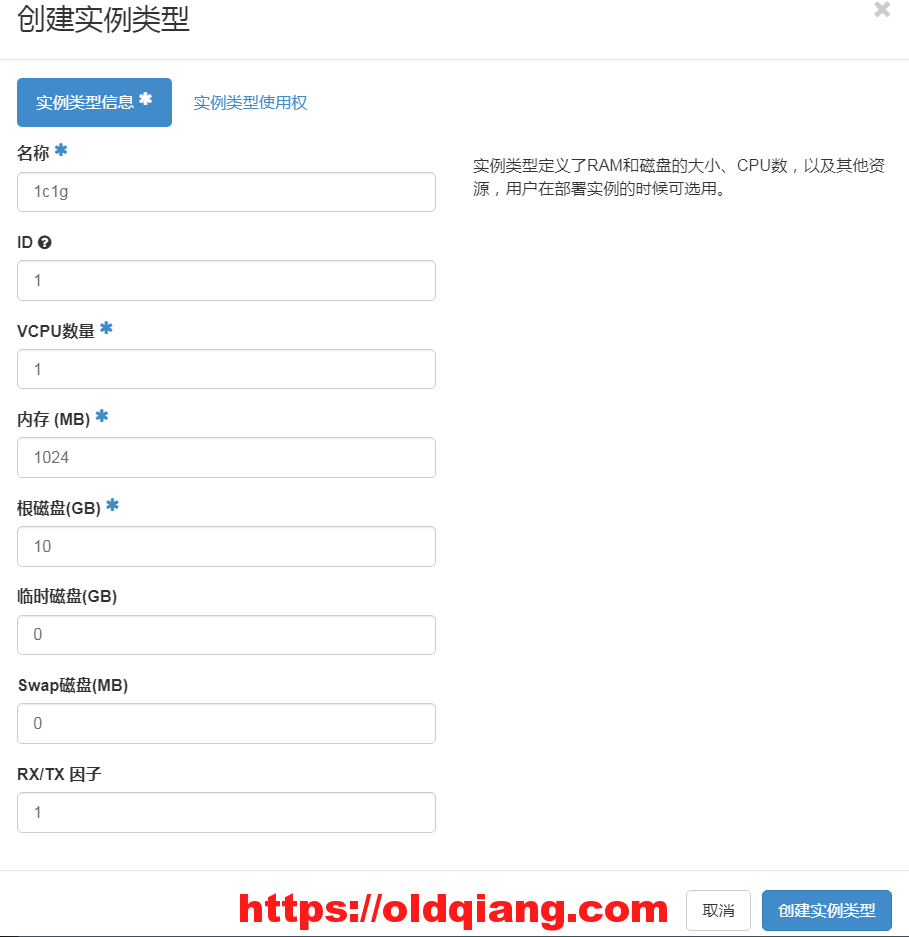

Create a flavor (the hardware profile for VMs)
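`openstack flavor create` takes `--ram` in MB and `--disk` in GB. A 4c8g VM from the inventory questions at the start therefore needs `--ram 8192`. Below is a sketch that turns such a shape string into flavor arguments. `flavor_args` is a hypothetical helper, and its 10 GB default disk is an arbitrary assumption:

```bash
#!/usr/bin/env bash
# Turn a shape such as "4c8g" into "openstack flavor create" arguments.
# RAM is converted from GB to MB; the disk size (GB) defaults to 10, an assumption.
# flavor_args is a hypothetical helper.
flavor_args() {
  local spec=$1 disk=${2:-10} cpus ram_gb
  cpus=${spec%%c*}     # "4c8g" -> "4"
  ram_gb=${spec#*c}    # "4c8g" -> "8g"
  ram_gb=${ram_gb%g}   # "8g"   -> "8"
  printf '%s\n' "--vcpus $cpus --ram $((ram_gb * 1024)) --disk $disk"
}
```

For example: `openstack flavor create $(flavor_args 4c8g 20) 4c8g`. The command substitution is left unquoted on purpose so that it splits into separate arguments.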

Command line

```bash
openstack flavor create --id 0 --vcpus 1 --ram 64 --disk 1 m1.nano
```

Web UI

Create a key pair

Launch the instance

Command line

```bash
# net-id is the ID of the wan network (see: openstack network list)
openstack server create --flavor m1.nano --image cirros \
  --nic net-id=0d517510-2e7e-4be4-a948-d0e4b207b2cd --security-group default \
  --key-name mykey oldboy
```

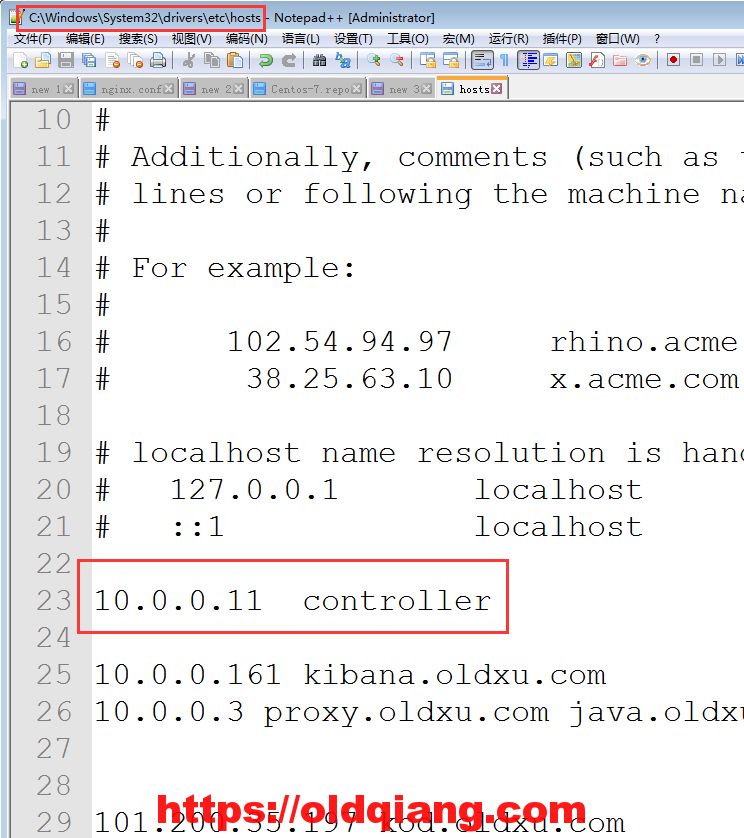

Remember to add the hosts entries on Windows too, so that the browser can resolve the controller hostname

12. Troubleshooting
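The [libvirt] fix that follows (virt_type = qemu) is typical when the compute node is itself a VM whose CPU does not expose hardware virtualization. The official install guide suggests checking /proc/cpuinfo for the vmx (Intel) or svm (AMD) flags. Here is a sketch of that check. `pick_virt_type` is a hypothetical helper:

```bash
#!/usr/bin/env bash
# Print "kvm" if the cpuinfo file shows Intel VT-x (vmx) or AMD-V (svm), else "qemu".
# pick_virt_type is a hypothetical helper; the default path is /proc/cpuinfo.
pick_virt_type() {
  local cpuinfo=${1:-/proc/cpuinfo}
  if grep -Eqw 'vmx|svm' "$cpuinfo"; then
    echo kvm
  else
    echo qemu
  fi
}
```

Running `pick_virt_type` on a compute node prints the value to use for `virt_type` in its nova.conf.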

```bash
# compute node
vim /etc/nova/nova.conf
```

```ini
[libvirt]
cpu_mode = none
virt_type = qemu
```

```bash
systemctl restart openstack-nova-compute.service
```

Then hard-reboot the instance

13. OpenStack VM performance tuning

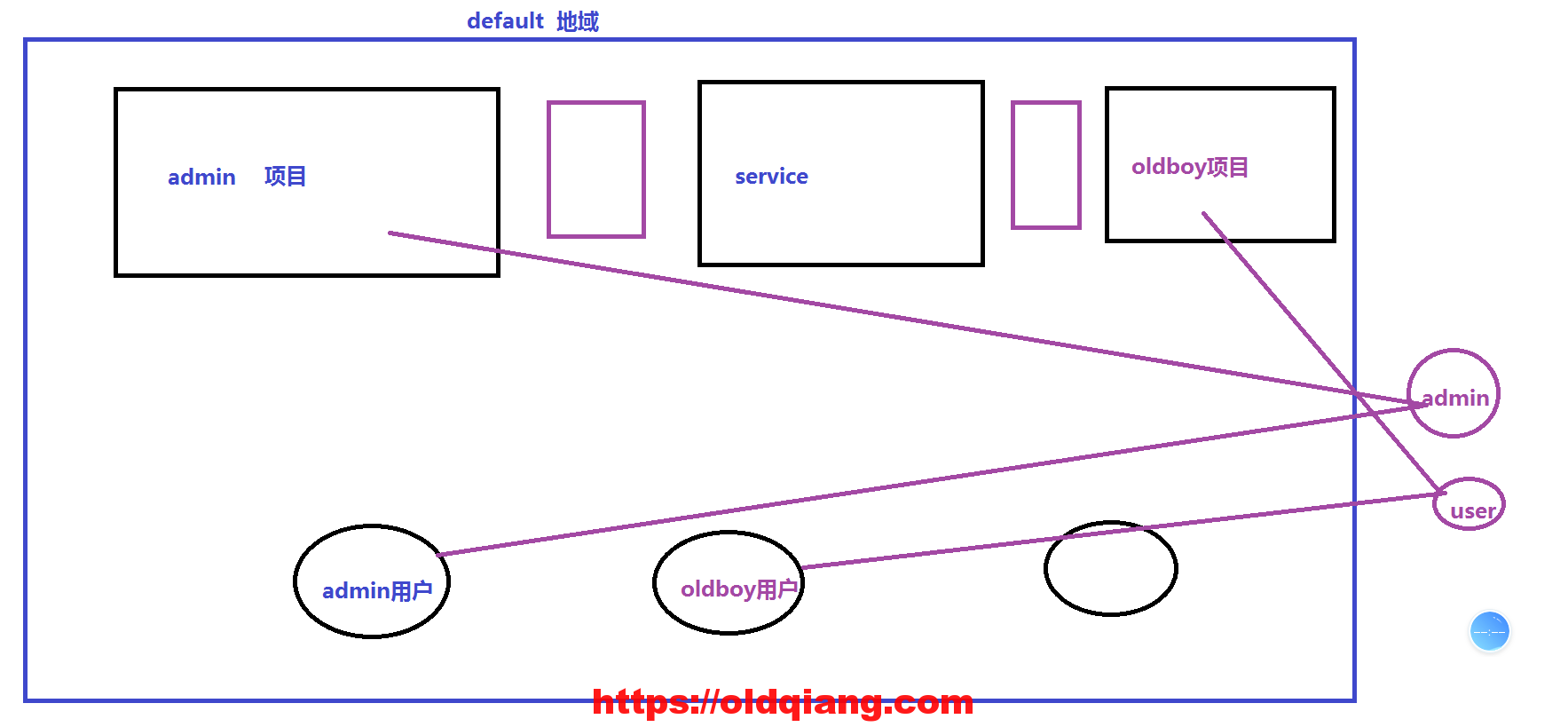

```bash
# compute node
vim /etc/nova/nova.conf
```

```ini
[libvirt]
virt_type = kvm
hw_machine_type = x86_64=pc-i440fx-rhel7.2.0
cpu_mode = host-passthrough
```

```bash
systemctl restart openstack-nova-compute.service
```

14. Relationship between projects, users, and roles

15. Upload an image

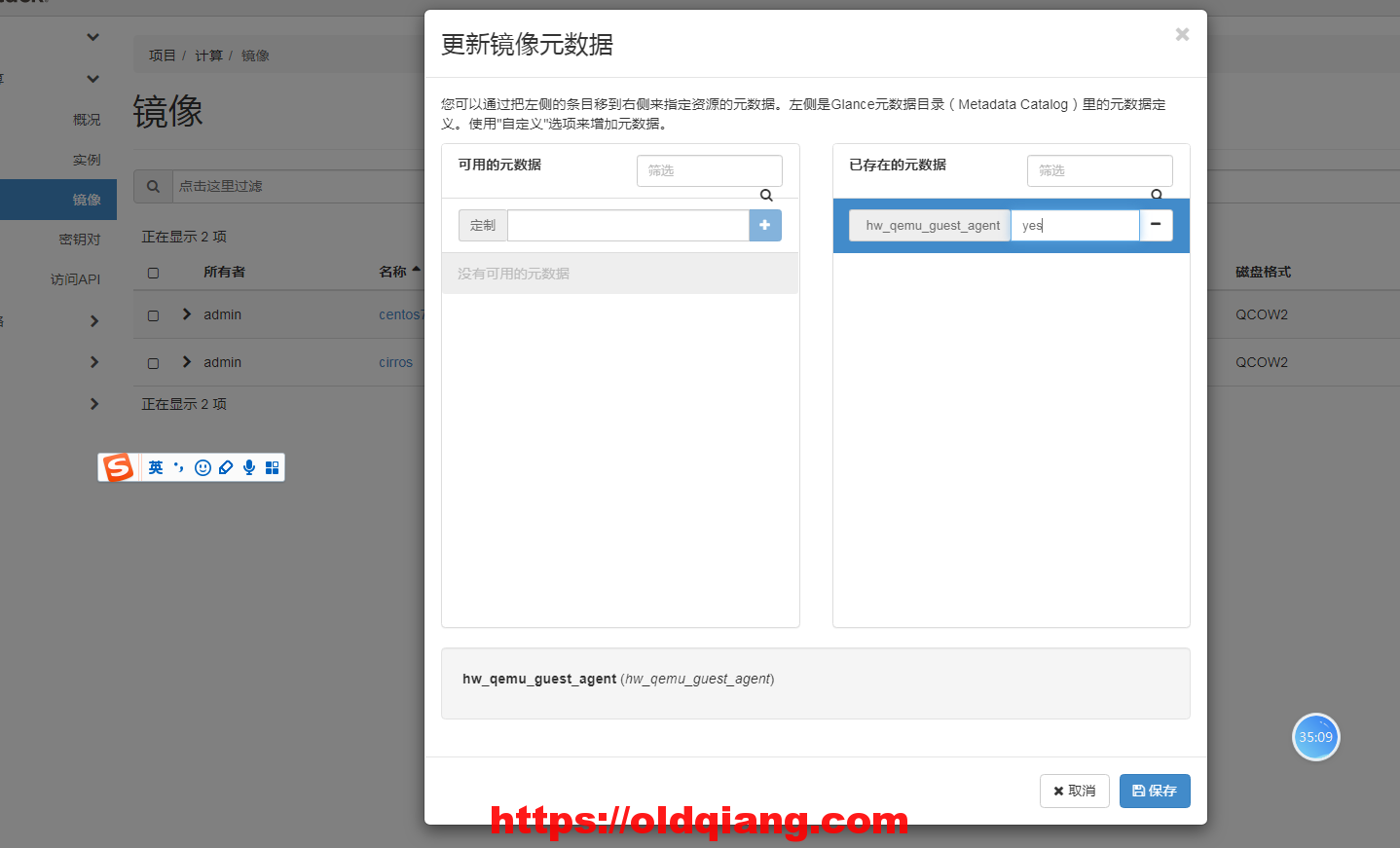

```bash
openstack image create "centos7.2" --file web03.qcow2 --disk-format qcow2 \
  --container-format bare --public
# then add the metadata property hw_qemu_guest_agent=yes to the image
```

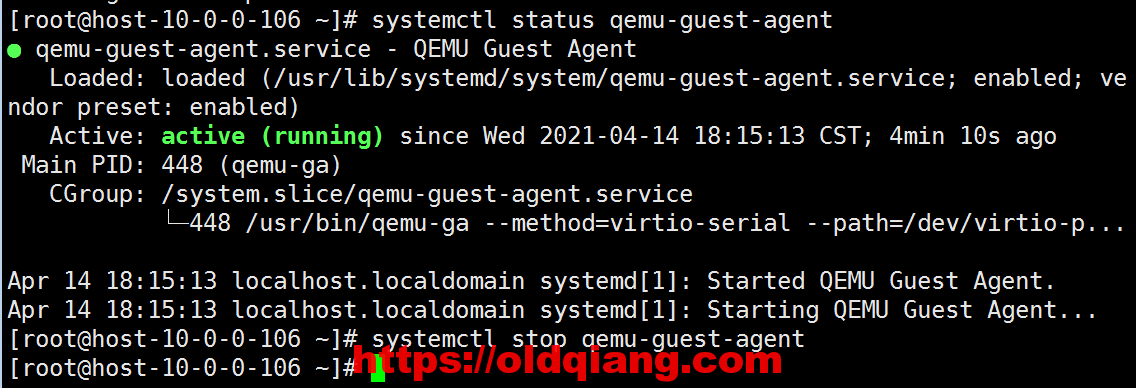

Test changing the password

First check that the qemu-guest-agent (qga) service is running inside the instance

```bash
nova set-password centos7
New password:
Again:
```

Reset the instance state

```bash
nova reset-state centos7 --active
```

16. Cinder block storage service

16.1 Controller node

On the controller node:

1: Create the database in MySQL and grant privileges

```sql
CREATE DATABASE cinder;
GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'localhost' IDENTIFIED BY 'CINDER_DBPASS';
GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'%' IDENTIFIED BY 'CINDER_DBPASS';
```

2. Create the user in keystone and assign it a role

```bash
openstack user create --domain default --password CINDER_PASS cinder
openstack role add --project service --user cinder admin
```

3. Register the API endpoints in keystone

```bash
openstack service create --name cinderv2 \
  --description "OpenStack Block Storage" volumev2
openstack endpoint create --region RegionOne \
  volumev2 public http://controller:8776/v2/%\(project_id\)s
openstack endpoint create --region RegionOne \
  volumev2 internal http://controller:8776/v2/%\(project_id\)s
openstack endpoint create --region RegionOne \
  volumev2 admin http://controller:8776/v2/%\(project_id\)s
```

4. Install the packages with yum

```bash
yum install openstack-cinder -y
```

5. Edit the configuration file

```bash
cp /etc/cinder/cinder.conf /etc/cinder/cinder.conf.bak
>/etc/cinder/cinder.conf
vim /etc/cinder/cinder.conf
```

```ini
[DEFAULT]
transport_url = rabbit://openstack:RABBIT_PASS@controller
auth_strategy = keystone
my_ip = 10.0.0.11
[backend]
[barbican]
[brcd_fabric_example]
[cisco_fabric_example]
[coordination]
[cors]
[cors.subdomain]
[database]
connection = mysql+pymysql://cinder:CINDER_DBPASS@controller/cinder
[fc-zone-manager]
[healthcheck]
[key_manager]
[keystone_authtoken]
auth_uri = http://controller:5000
auth_url = http://controller:35357
memcached_servers = controller:11211
auth_type = password
project_domain_name = default
user_domain_name = default
project_name = service
username = cinder
password = CINDER_PASS
[matchmaker_redis]
[oslo_concurrency]
lock_path = /var/lib/cinder/tmp
[oslo_messaging_amqp]
[oslo_messaging_kafka]
[oslo_messaging_notifications]
[oslo_messaging_rabbit]
[oslo_messaging_zmq]
[oslo_middleware]
[oslo_policy]
[oslo_reports]
[oslo_versionedobjects]
[profiler]
[ssl]
```

6. Sync the database

```bash
su -s /bin/sh -c "cinder-manage db sync" cinder
```

7. Start the services

Edit nova's configuration file on the controller node

```bash
vim /etc/nova/nova.conf
```

```ini
[cinder]
os_region_name = RegionOne
```

Start the services

```bash
systemctl restart openstack-nova-api.service
systemctl enable openstack-cinder-api.service openstack-cinder-scheduler.service
systemctl start openstack-cinder-api.service openstack-cinder-scheduler.service
```

8. Verify

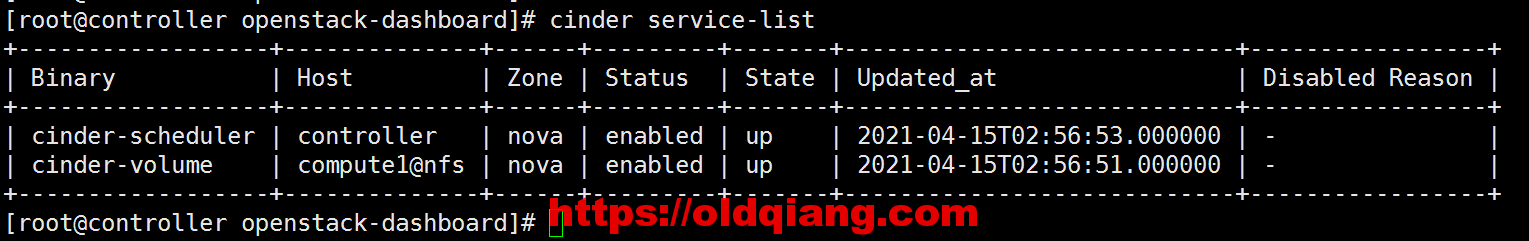

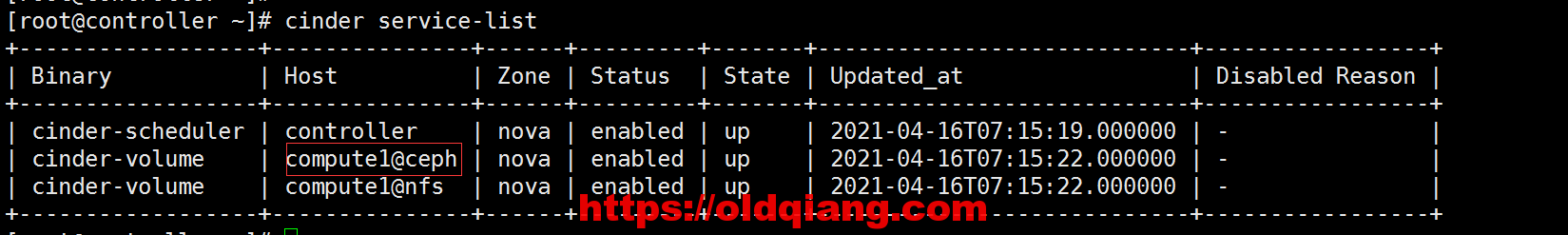

```bash
cinder service-list
```

16.2 Connecting cinder to an NFS storage backend

Install the NFS server

```bash
# on the NFS server (10.0.0.11 in this setup, matching /etc/cinder/nfs_shares)
yum install nfs-utils.x86_64 -y
mkdir -p /data
echo '/data 10.0.0.0/24(rw,sync,no_root_squash,no_all_squash)' >/etc/exports
systemctl start nfs
systemctl enable nfs
```

Install cinder-volume

On the compute node:

1. Install

```bash
yum install openstack-cinder targetcli python-keystone -y
```

2. Configure

```bash
cp /etc/cinder/cinder.conf /etc/cinder/cinder.conf.bak
>/etc/cinder/cinder.conf
vim /etc/cinder/cinder.conf
```

```ini
[DEFAULT]
transport_url = rabbit://openstack:RABBIT_PASS@controller
auth_strategy = keystone
my_ip = 10.0.0.31
enabled_backends = nfs
glance_api_servers = http://controller:9292
[backend]
[barbican]
[brcd_fabric_example]
[cisco_fabric_example]
[coordination]
[cors]
[cors.subdomain]
[database]
connection = mysql+pymysql://cinder:CINDER_DBPASS@controller/cinder
[fc-zone-manager]
[healthcheck]
[key_manager]
[keystone_authtoken]
auth_uri = http://controller:5000
auth_url = http://controller:35357
memcached_servers = controller:11211
auth_type = password
project_domain_name = default
user_domain_name = default
project_name = service
username = cinder
password = CINDER_PASS
[matchmaker_redis]
[oslo_concurrency]
lock_path = /var/lib/cinder/tmp
[oslo_messaging_amqp]
[oslo_messaging_kafka]
[oslo_messaging_notifications]
[oslo_messaging_rabbit]
[oslo_messaging_zmq]
[oslo_middleware]
[oslo_policy]
[oslo_reports]
[oslo_versionedobjects]
[profiler]
[ssl]
[nfs]
volume_driver = cinder.volume.drivers.nfs.NfsDriver
nfs_shares_config = /etc/cinder/nfs_shares
volume_backend_name = nfs
nfs_qcow2_volumes = True
```

Create the /etc/cinder/nfs_shares configuration file

```bash
vim /etc/cinder/nfs_shares
```

```
10.0.0.11:/data
```

3. Start

```bash
systemctl enable openstack-cinder-volume.service
systemctl start openstack-cinder-volume.service
chown -R cinder:cinder /var/lib/cinder/mnt/
```

4. Verify

17. How instance creation works

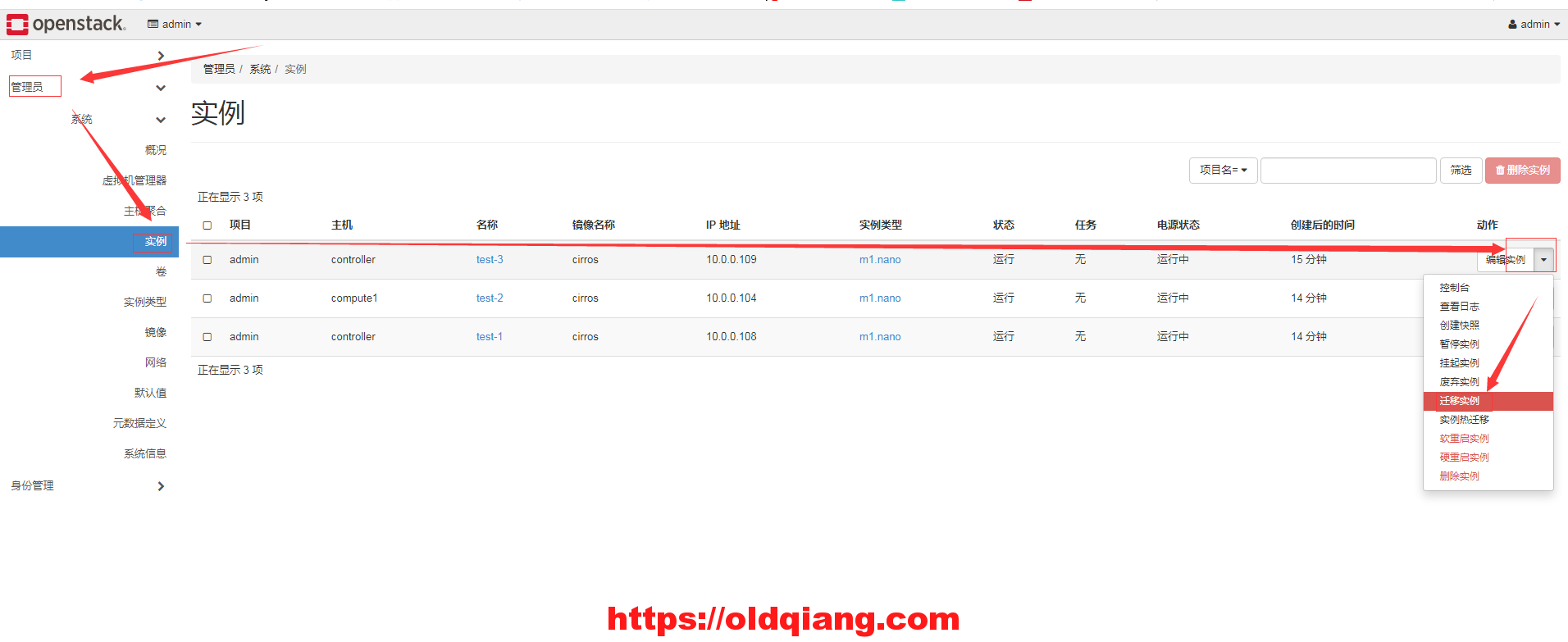

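18. Cold migration and resizing (hardware upgrades) of instances

Cold migration requires passwordless SSH login between the nova users on the nodes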

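```bash
usermod -s /bin/bash nova
su - nova
# press Enter to accept the default key path
ssh-keygen -t rsa -N '' -q
cp -a .ssh/id_rsa.pub .ssh/authorized_keys
ssh nova@10.0.0.11
scp -rp .ssh root@10.0.0.31:`pwd`
# on node 10.0.0.31: the nova user also needs a login shell there
[root@compute1 cinder]# usermod -s /bin/bash nova
[root@compute1 cinder]# chown -R nova:nova /var/lib/nova/.ssh
# back on the original node, test the login
ssh nova@10.0.0.31
```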

Resizing (hardware configuration upgrade)

```bash
# controller node
vim /etc/nova/nova.conf
```

```ini
[DEFAULT]
allow_resize_to_same_host = true
```

```bash
systemctl restart openstack-nova-api.service
```

19. Building OpenStack images

See the official documentation: https://docs.openstack.org/image-guide/centos-image.html

20. Connecting OpenStack to Ceph storage

Preparing the Ceph environment

- Add a 200 GB disk
- Change the IP address
- Change the hostname
- Add the hosts entries
- Run the script

Detailed steps

```bash
# install the Ceph Jewel (J) release
rm -fr /etc/yum.repos.d/local.repo
curl -o /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo
curl -o /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo
vim /etc/yum.repos.d/ceph.repo
```

```ini
[ceph-tool]
name=ceph-tools
baseurl=https://mirror.tuna.tsinghua.edu.cn/ceph/rpm-jewel/el7/noarch/
gpgcheck=0
[ceph]
name=ceph
baseurl=https://mirror.tuna.tsinghua.edu.cn/ceph/rpm-jewel/el7/x86_64/
gpgcheck=0
```

```bash
yum install ceph ceph-radosgw ceph-deploy -y
mkdir cluster
cd cluster/
ceph-deploy new ceph02
vim ceph.conf
# append the following lines:
#   osd pool default size = 1
#   osd crush chooseleaf type = 0
#   osd max object name len = 256
#   osd journal size = 128
ceph-deploy mon create-initial
ceph-deploy osd prepare ceph02:/dev/sdb
ceph-deploy osd activate ceph02:/dev/sdb1
```

Check the Ceph cluster

```
[root@ceph01 ~]# ceph -s
    cluster d3853d3e-2864-4fe5-88ef-92e64d77cef3
     health HEALTH_OK
     monmap e1: 1 mons at {ceph01=10.0.0.14:6789/0}
            election epoch 2, quorum 0 ceph01
     osdmap e5: 1 osds: 1 up, 1 in
      pgmap v8: 64 pgs, 1 pools, 0 bytes data, 0 objects
            33880 kB used, 199 GB / 199 GB avail
                  64 active+clean
```

Create a storage pool
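In `ceph osd pool create openstack 128 128` the two numbers are pg_num and pgp_num. A rule of thumb from the Ceph documentation is (number of OSDs × 100) / replica count, rounded up to a power of two. With the single OSD and `osd pool default size = 1` configured earlier, that gives 128. Here is a sketch of that arithmetic. `pg_count` is a hypothetical helper, and its result is a starting point rather than a hard requirement:

```bash
#!/usr/bin/env bash
# Rule-of-thumb PG count: ceil(OSDs * 100 / replica_size), rounded up to a power of two.
# pg_count is a hypothetical helper.
pg_count() {
  local osds=$1 size=$2 target pg=1
  target=$(( (osds * 100 + size - 1) / size ))  # integer ceiling division
  while [ "$pg" -lt "$target" ]; do
    pg=$(( pg * 2 ))
  done
  echo "$pg"
}
```

For example: `ceph osd pool create openstack $(pg_count 1 1) $(pg_count 1 1)`.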

```bash
ceph osd pool create openstack 128 128
```

Create an RBD disk

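```bash
rbd create --pool openstack test.raw --size 1024
rbd ls --pool openstack
rbd info --pool openstack test.raw
# if rbd map fails with a feature-set mismatch on the CentOS 7 kernel,
# recreate the image with --image-feature layering
rbd map openstack/test.raw
mkfs.xfs /dev/rbd0
rbd resize openstack/test.raw --size 2048
```

Connect glance to Ceph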

```bash
# controller node
yum install ceph-common -y
scp -rp root@10.0.0.14:/etc/ceph/ceph.conf /etc/ceph/
scp -rp root@10.0.0.14:/etc/ceph/ceph.client.admin.keyring /etc/ceph/
chmod 777 /etc/ceph/ceph.client.admin.keyring
# delete all existing instances and images first, then edit the glance-api configuration file
vim /etc/glance/glance-api.conf
```

```ini
[DEFAULT]
show_image_direct_url = True
[glance_store]
stores = rbd
default_store = rbd
rbd_store_pool = openstack
rbd_store_user = admin
rbd_store_ceph_conf = /etc/ceph/ceph.conf
rbd_store_chunk_size = 8
#stores = file,http
#default_store = file
#filesystem_store_datadir = /var/lib/glance/images/
```

```bash
# restart the service
systemctl restart openstack-glance-api.service
# test: convert the image to raw format first, then upload it
qemu-img convert -f qcow2 -O raw cirros-0.3.4-x86_64-disk.img cirros-0.3.4-x86_64-disk.raw
openstack image create "cirros" --file cirros-0.3.4-x86_64-disk.raw \
  --disk-format raw --container-format bare --public
```

Verify

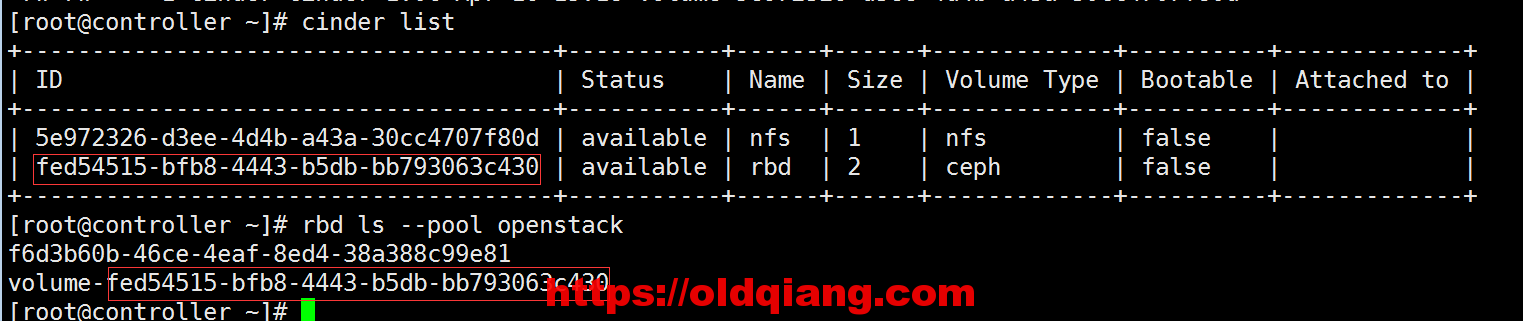

```
[root@ceph02 ~]# rbd ls --pool openstack
f6d3b60b-46ce-4eaf-8ed4-38a388c99e81
[root@ceph02 ~]# rbd info --pool openstack f6d3b60b-46ce-4eaf-8ed4-38a388c99e81
rbd image 'f6d3b60b-46ce-4eaf-8ed4-38a388c99e81':
	size 40162 kB in 10 objects
	order 22 (4096 kB objects)
	block_name_prefix: rbd_data.10155a5e5f74
	format: 2
	features: layering, exclusive-lock, object-map, fast-diff, deep-flatten
	flags:
```

Connect cinder-volume to Ceph

```bash
# storage node (here, the compute node)
yum install ceph-common -y
scp -rp root@10.0.0.14:/etc/ceph/ceph.conf /etc/ceph/
scp -rp root@10.0.0.14:/etc/ceph/ceph.client.admin.keyring /etc/ceph/
chmod 777 /etc/ceph/ceph.client.admin.keyring
vim /etc/cinder/cinder.conf
```

```ini
[DEFAULT]
enabled_backends = nfs,ceph
[ceph]
volume_driver = cinder.volume.drivers.rbd.RBDDriver
volume_backend_name = ceph
rbd_pool = openstack
rbd_ceph_conf = /etc/ceph/ceph.conf
rbd_flatten_volume_from_snapshot = false
rbd_max_clone_depth = 5
rbd_store_chunk_size = 4
rados_connect_timeout = -1
rbd_user = admin
rbd_secret_uuid = 457eb676-33da-42ec-9a8c-9293d545c337
```

```bash
systemctl restart openstack-cinder-volume.service
```

Verify (on the controller node):

Connect nova-compute to Ceph
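The UUID 457eb676-33da-42ec-9a8c-9293d545c337 used in these steps is arbitrary, but it must be identical in secret.xml and in rbd_secret_uuid in both nova.conf and cinder.conf. A new UUID can be generated with `uuidgen`. The value passed to `virsh secret-set-value --base64` is the key stored in the client.admin keyring. Below is a sketch that reads that key from the keyring file instead of copying it by hand. `keyring_key` is a hypothetical helper. On a node with admin access, `ceph auth get-key client.admin` prints the same key:

```bash
#!/usr/bin/env bash
# Print the base64 key from a Ceph keyring file ("key = ..." line).
# keyring_key is a hypothetical helper; default path is the admin keyring.
keyring_key() {
  awk '$1 == "key" && $2 == "=" { print $3; exit }' \
    "${1:-/etc/ceph/ceph.client.admin.keyring}"
}
```

For example: `virsh secret-set-value --secret 457eb676-33da-42ec-9a8c-9293d545c337 --base64 "$(keyring_key)"`.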

```bash
vim /etc/nova/nova.conf
```

```ini
[DEFAULT]
force_raw_images = True
[libvirt]
# ...
images_type = rbd
images_rbd_pool = openstack
images_rbd_ceph_conf = /etc/ceph/ceph.conf
rbd_user = admin
rbd_secret_uuid = 457eb676-33da-42ec-9a8c-9293d545c337
# disk_cachemodes belongs in [libvirt] and takes disk_type=mode pairs
disk_cachemodes = network=writeback
```

```bash
cat > secret.xml <<EOF
<secret ephemeral='no' private='no'>
  <uuid>457eb676-33da-42ec-9a8c-9293d545c337</uuid>
  <usage type='ceph'>
    <name>client.admin secret</name>
  </usage>
</secret>
EOF
virsh secret-define --file secret.xml
# the base64 value is the key from /etc/ceph/ceph.client.admin.keyring
virsh secret-set-value --secret 457eb676-33da-42ec-9a8c-9293d545c337 --base64 AQDWB3lgVzaUIxAA1OuVEQTJOjrfChXNBq++Wg==
systemctl restart openstack-nova-compute
```

Verify

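Launch a new instance

21. Live migration of instances

Requirements:

- Passwordless login configured for libvirtd

- Instance disks stored on shared storage

Adding a flat network segment; layer-3 networking with VXLAN; configuring LBaaS in OpenStack: https://oldqiang.com/archives/157.html

manila: file sharing service (NAS storage); swift: object storage service (like OSS); heat: orchestration service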